Overview

Last night, on Thursday, February 15, 2024, my group and I were at N@TM to show our project to interested people. We ended up getting quite a lot of people to come to our table! This blog explains what we plan to do with our project in the long term and details some of the things that went on at the event and the kinds of projects we checked out.

Two Trimester focus planning

How you used N@tM to capture test data to be used in trimester 3?

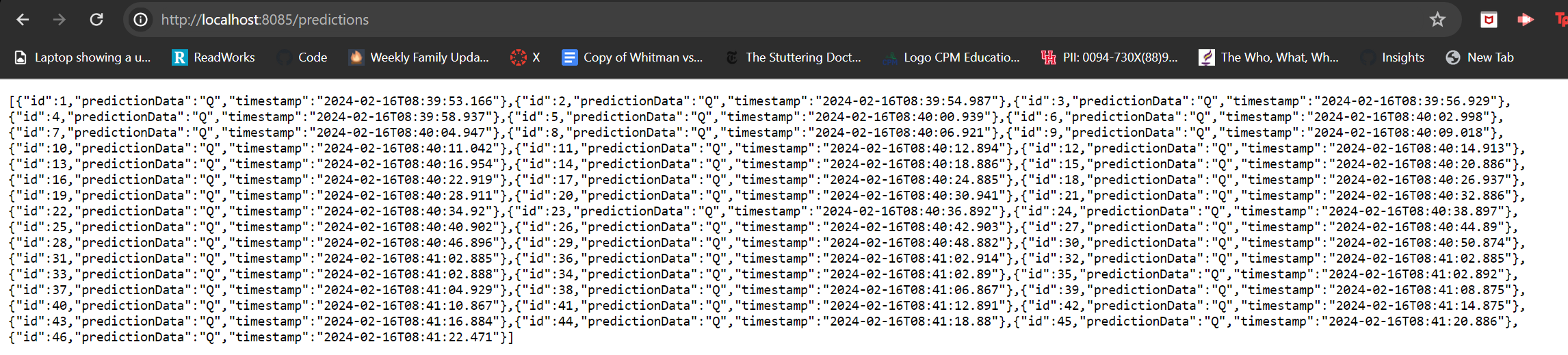

Because we got a lot of interested people to come to our table, they ended up playing a lot of rounds of the ASL game, which added more points to their scores on the leaderboard and provided more image data for the predictions API controller. All of this test data will be very useful in making the model more accurate over time, as the game continues to capture frames of the screen every few seconds. The data we obtained from N@TM helps us see the accuracy of the model by looking at the letter it predicts, and based on that, all of us can work together to make the predictions as accurate as possible.
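One way to turn this kind of data into an accuracy number is sketched below. It assumes each round is stored as a pair of (letter the user was asked to sign, letter the model predicted). That record shape and the function name `prediction_accuracy` are assumptions for illustration, not the actual schema or code of the project's backend, and the sample rounds are made up.

```python
from typing import Iterable, Tuple


def prediction_accuracy(records: Iterable[Tuple[str, str]]) -> float:
    """Return the fraction of rounds where the predicted letter matches the target.

    Each record is a hypothetical (target_letter, predicted_letter) pair.
    """
    total = 0
    correct = 0
    for target, predicted in records:
        total += 1
        # Compare case-insensitively and ignore stray whitespace.
        if target.strip().upper() == predicted.strip().upper():
            correct += 1
    # Avoid dividing by zero when no rounds have been recorded yet.
    return correct / total if total else 0.0


# Made-up sample rounds, only to show how the function is called.
sample_rounds = [("A", "Q"), ("M", "M"), ("C", "J"), ("Q", "Q")]
print(prediction_accuracy(sample_rounds))  # prints 0.5
```

Tracking a number like this after each change to the model would show whether the predictions are actually getting better over time.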

From feedback, something you will add to your project? Other?

One thing that we noticed is that when people started playing the game, they did not know that they were required to put their hand up to the screen, and we ended up having to tell them this while they were playing. Ideally, the instructions should include this, which is why I think it is important for me to modify the instructions so that they are clearer for users. Another thing that we thought about was of course making the model more accurate (covered in more detail in the next section of this blog), as at times the model would mark the person right when they were wrong and vice versa.

Blog / article on you and team

Reflection on glows/grows from your demo/presentations

Overall, I had an amazing time at N@TM because it gave me the opportunity to enjoy the event not only with my group mates but also with some of my friends who happened to have the same shift as us. It was also fun watching a lot of our visitors try to play the game, as all of them seemed to have fun with how challenging it can sometimes be to copy sign language. All of our test users seemed to be very engaged with our project, even as we explained what the backend data looked like. The fact that everyone was so interested in the backend data was quite surprising, considering that all those variables and numbers may appear very confusing given how many the model was capturing at once.

That being said, there were also a few areas of improvement in our demos. For example, the model that we used for the game and the frames definitely could have been much more accurate. One of the things that we noticed was that most of the letters the model predicted were either “Q”, “M”, or “J”, and while those could have been some of the letters that the user tried to show, they are most definitely not the only letters. Afterwards we as a group tried it out and saw that no matter what letter we showed to the screen, it would still display the same predictions. This explained why, for the most part, the points did not update as much as we expected: according to the model, what the user showed to the camera was incorrect. In order to improve the model, we will need to adjust its settings (e.g., the learning rate) and perhaps provide it with more training examples. Both of these changes should help the model decide more accurately whether the user’s gesture is correct or incorrect.
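A quick way to check for this kind of problem is to count how often each letter gets predicted. The sketch below is only an illustration: the function names, the idea of a plain list of predicted letters, and the 0.8 threshold are all assumptions, not part of the project's code, and the sample list is made up.

```python
from collections import Counter
from typing import Dict, List


def letter_shares(predictions: List[str]) -> Dict[str, float]:
    """Return each predicted letter's share of all predictions, most common first."""
    counts = Counter(p.upper() for p in predictions)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {letter: n / total for letter, n in counts.most_common()}


def looks_collapsed(predictions: List[str], top_k: int = 3, threshold: float = 0.8) -> bool:
    """Return True when the top_k most common letters make up at least `threshold` of predictions.

    The threshold is an arbitrary illustrative value, not a tuned one.
    """
    counts = Counter(p.upper() for p in predictions)
    total = sum(counts.values())
    if total == 0:
        return False
    top = sum(n for _, n in counts.most_common(top_k))
    return top / total >= threshold


# Made-up predictions dominated by three letters.
sample = ["Q"] * 6 + ["M"] * 2 + ["J"] + ["A"]
print(letter_shares(sample))
print(looks_collapsed(sample))
```

If nearly every prediction lands on the same few letters regardless of what is shown to the camera, that points to a problem with training or preprocessing rather than with how users are signing.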

Another thing we could improve on is of course getting the backend deployed, as we weren’t able to because the servers broke and because too many requests were created by the quiz we had earlier this week.

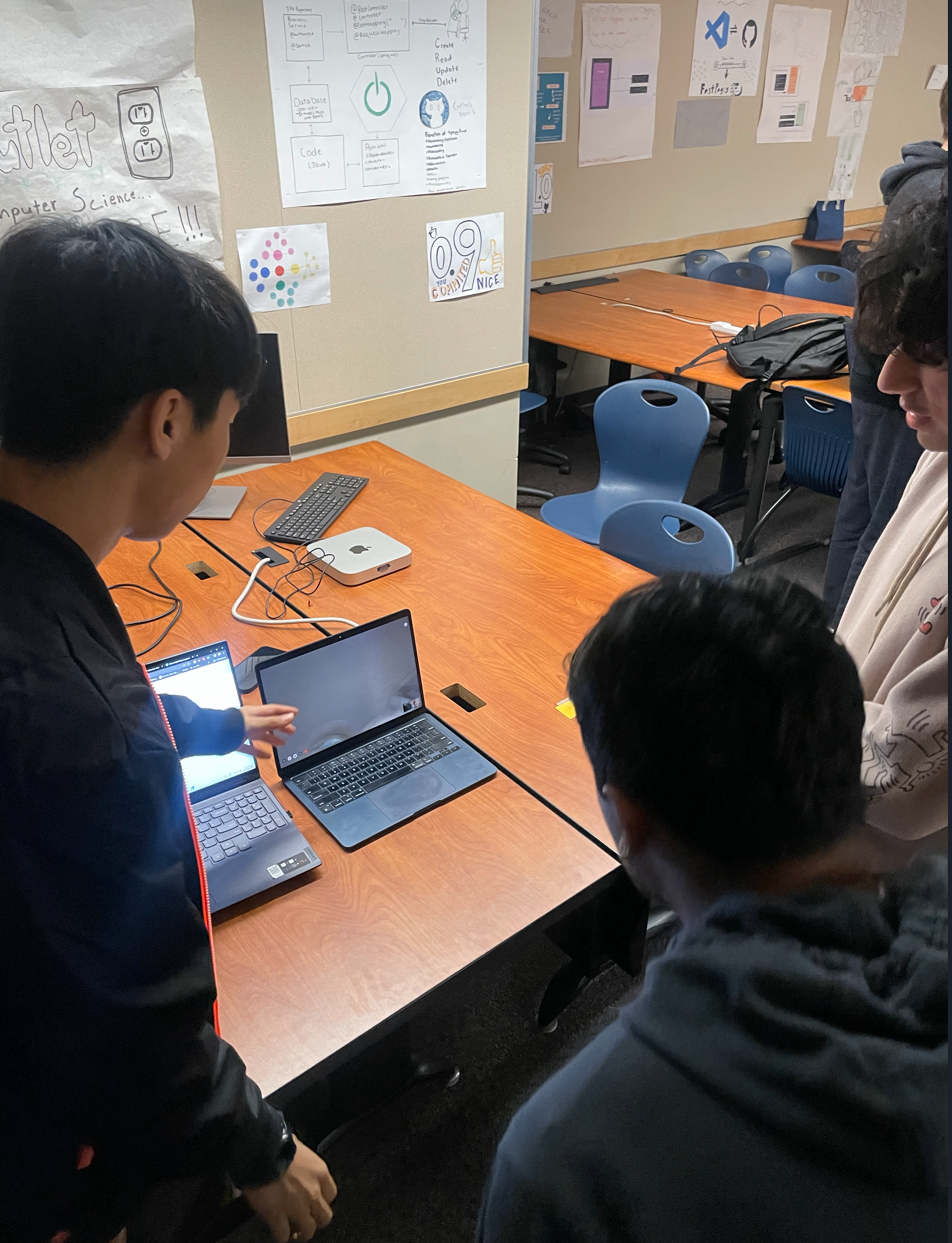

Visuals or pics of team and people you talked

Unfortunately, Anthony had to go to a Junior Olympics competition, so he was not able to make it to the event in person. However, he still was willing to come to the event through FaceTime and talk from there, as you can see in the above image!

This was the first group of our many interested users that we showed our project to. We started by explaining to them what the game was and why we made it (which was of course to help raise awareness of people who are hard of hearing). We gave them the opportunity to play it themselves a few times, as shown in both this image and the image below.

We took turns explaining the game, leaderboard, database, etc., and as a whole we all did an amazing job. The image below is of me specifically explaining the game to them as well as what is going on behind the scenes:

Here you can see some of our first test users of our project. They all had a really good time playing the game!

We of course had a few techy users who came with some techy questions (all of which we could answer). Most of the users were still there just to be able to play, and even if they had no idea what was going on with our backend database, they all seemed to enjoy it very much.

Also just for fun this is what our backend database looked like:

Lots of Q’s, am I right?

Blog on event

something you saw in CompSci that impressed

This was a project that I found pretty interesting from CSP. As shown in the image, the project is centered around the mobile game Clash Royale and its many decks of cards. Essentially, the project works like this: you select 8 cards from a large pool of cards, and once you submit your choices and go to your “Favorites” page, you will see those same 8 cards pop up on the page. It was interesting to me not only because it was built around Clash Royale but also because of the kinds of elements that they used to put this project together.

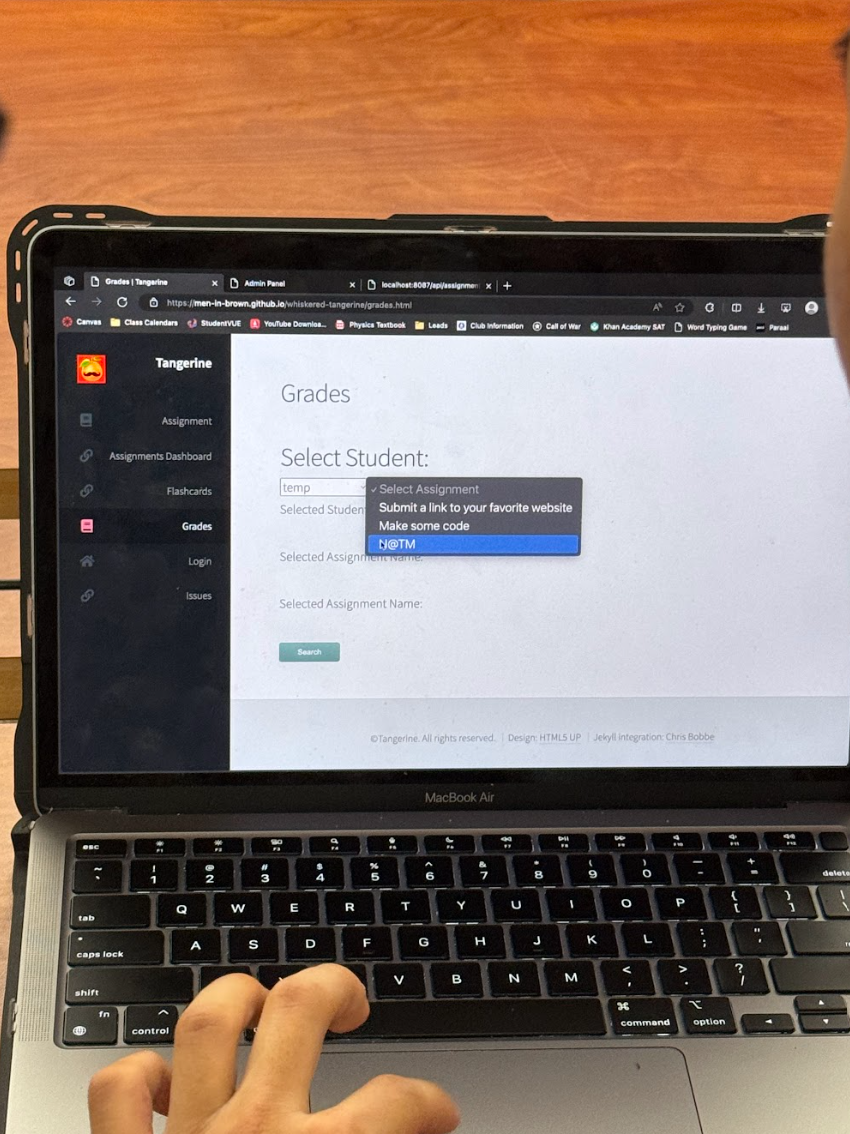

This happened to be a CSA project from third period, and the idea behind this game was that you could add your own assignments or homework and potentially get them graded. I really liked the design of the page, as it was very aesthetically pleasing to me and the many others who tested the project out. I could tell that a lot of hard work was put into it and that this group enjoyed creating this project. I saw a lot of people gather around for this project, so I am sure those people thought the same thing as me.

something you saw outside of CompSci that you liked

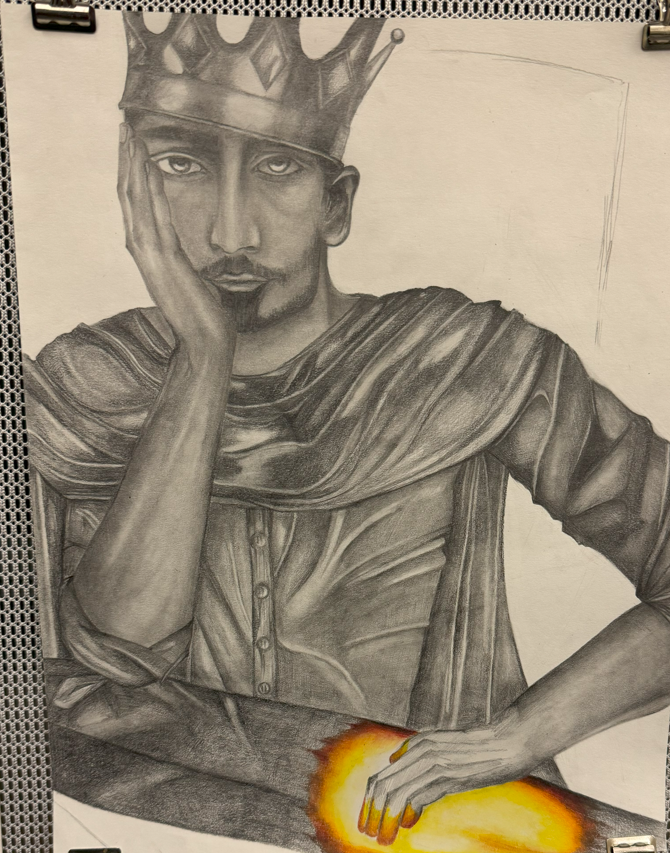

I chose to include all three of these in my blog because they were the ones that amazed me the most in terms of how realistic and detailed they were. I have done something similar to all three of these, except rather than doing it by hand I used software called Blender to model objects. For me, Blender was much easier to work with, and I could make all the adjustments I wanted automatically with just a click. With the kind of art that is shown above (from AP Studio Art, Drawing and Painting), I would imagine it is much harder to do that and requires a lot more dedication and effort to make the drawing appear as real as it did at last night’s event.

N@tM Reflection

I had a really good time looking at work from not only our class, but also from other electives such as 3D Animation, Ceramics, and Design and Mixed Media. Regardless of where the projects came from, looking at them left me amazed at how talented our school is and how we have the ability to put out work like this. I was especially interested in the projects from 3D Animation, as I myself have taken the class and remember some of the projects that we had to do. Looking at the work from that class made me think about what I could have done better when I was in it, which will definitely be helpful if I plan on becoming a 3D artist in the long run. I also enjoyed being able to look at all of these projects with other people, as doing so gave us the opportunity to discuss what we thought about them. Overall, N@tM for Trimester 2 was an amazing experience and I look forward to continuing the project for trimester 3.